ScanNet Indoor Scene Understanding Challenge

CVPR 2020 Workshop, Seattle, WA

Join Us Here: Live Stream

Introduction

3D scene understanding for indoor environments is becoming an increasingly important area. Application domains such as augmented and virtual reality, computational photography, interior design, and autonomous mobile robots all require a deep understanding of 3D interior spaces, the semantics of objects that are present, and their relative configurations in 3D space.

We present the first comprehensive challenge for 3D scene understanding of entire rooms at the object instance-level with 5 tasks based on the ScanNet dataset. The ScanNet dataset is a large-scale semantically annotated dataset of 3D mesh reconstructions of interior spaces (approx. 1500 rooms and 2.5 million RGB-D frames). It is used by more than 480 research groups to develop and benchmark state-of-the-art approaches in semantic scene understanding. A key goal of this challenge is to compare state-of-the-art approaches operating on image data (including RGB-D) with approaches operating directly on 3D data (point cloud, or surface mesh representations). Additionally, we pose both object category label prediction (commonly referred to as semantic segmentation), and instance-level object recognition (object instance prediction and category label prediction). We propose five tasks that cover this space:

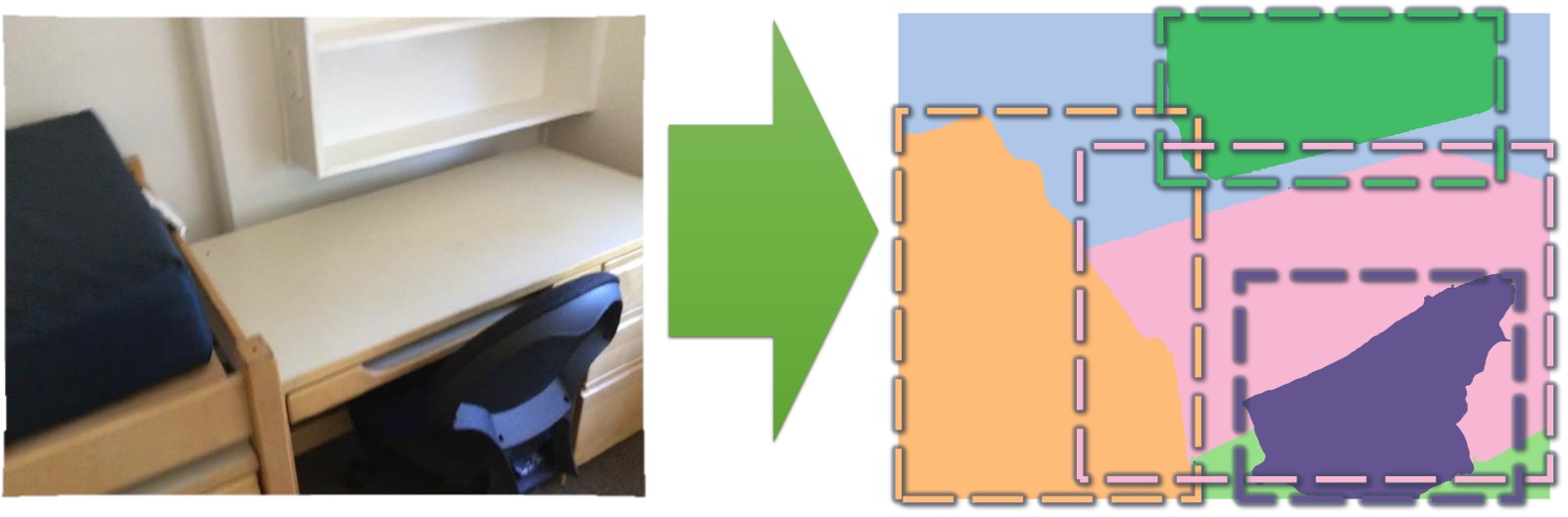

- 2D semantic label prediction: prediction of object category labels from 2D image representation

- 2D semantic instance prediction: prediction of object instance and category labels from 2D image representation

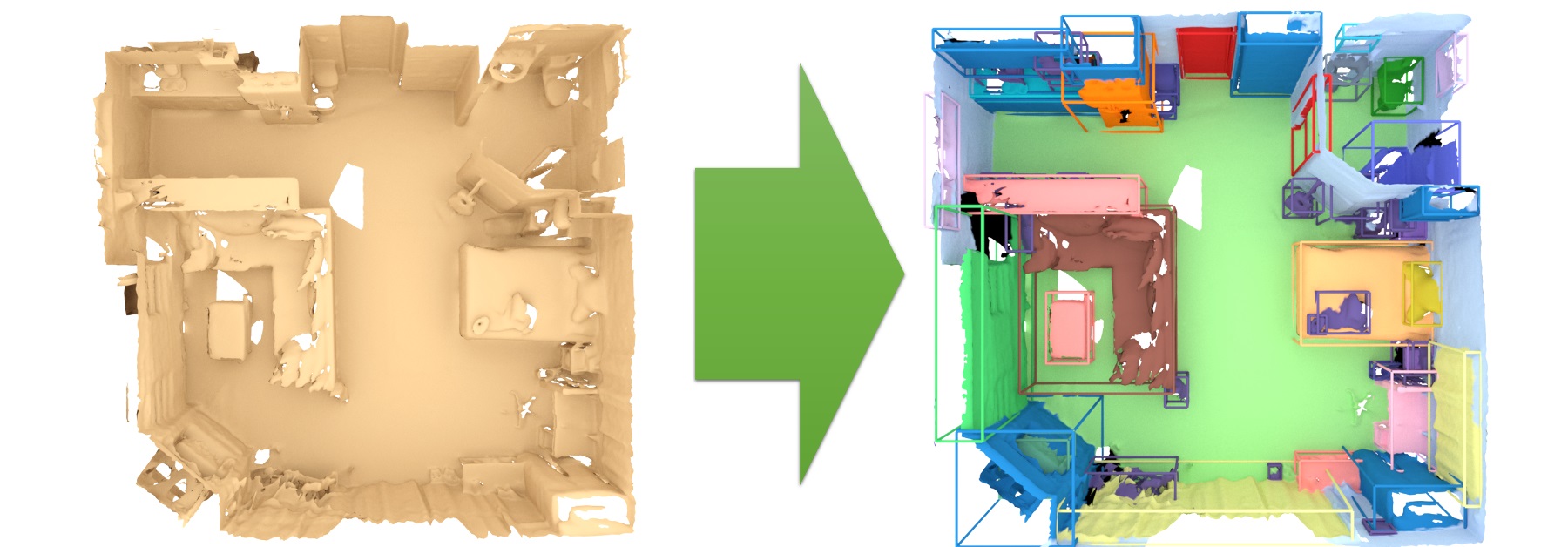

- 3D semantic label prediction: prediction of object category labels from 3D representation

- 3D semantic instance prediction: prediction of object instance and category labels from 3D representation

- Scene type classification: classification of entire 3D room into a scene type

For each task, challenge participants are provided with prepared training, validation, and test datasets, and automated evaluation scripts. In addition to the public train-val-test split, benchmarking is done on a hidden test set whose raw data can be downloaded without annotations; in order to participate in the benchmark, the predictions on the hidden test set are uploaded to the evaluation server, where they are evaluated. Submission is restricted to submissions every two weeks to avoid finetuning on the test dataset. See more details at http://kaldir.vc.in.tum.de/scannet_benchmark/documentation if you would like to participate in the challenge. The evaluation server leaderboard is live at http://kaldir.vc.in.tum.de/scannet_benchmark/.

2D semantic label prediction

2D semantic instance prediction

3D semantic label prediction

3D semantic instance prediction

Important Dates

| Poster Submission Deadline | May 20 2020 |

| Notification to Authors | May 25 2020 |

| Workshop Date | June 19 2020 |

Posters

To submit a poster to the workshop, please email the poster as .pdf file to scannet@googlegroups.com.

Schedule

| Welcome and Introduction | 1:50pm - 2:00pm |

| Implicit Neural Representations: From Objects to 3D Scenes (Andreas Geiger) | 2:00pm - 2:30pm |

| Winner Talk: KPConv (Hugues Thomas) | 2:30pm - 2:40pm |

| Winner Talk: SparseConvNet (Benjamin Graham) | 2:40pm - 2:50pm |

| Winner Talk: PointGroup (Li Jiang) | 2:50pm - 3:00pm |

| Break | 3:00pm - 3:15pm |

| Winner Talk: Sparse 3D Perception (Chris Choy) | 3:15pm - 3:25pm |

| Winner Talk: 3D-MPA (Francis Engelmann) | 3:25pm - 3:35pm |

| Winner Talk: OccuSeg (Tian Zheng) | 3:35pm - 3:45pm |

| Break | 3:45pm - 4:00pm |

| CVPR is a Contemporary Art Exhibition (Yasutaka Furukawa) | 4:00pm - 4:30pm |

| Semantic Scene Reconstruction from RGBD Scans (Thomas Funkhouser) | 4:30pm - 5:00pm |

| Panel Discussion and Conclusion | 5:00pm - 5:30pm |

Invited Speakers

Andreas Geiger is professor at the University of Tübingen and group leader at the Max Planck Institute for Intelligent Systems. Prior to this, he was a visiting professor at ETH Zürich and a research scientist at MPI-IS. He studied at KIT, EPFL and MIT and received his PhD degree in 2013 from the KIT. His research interests are at the intersection of 3D reconstruction, motion estimation, scene understanding and sensory-motor control. He maintains the KITTI vision benchmark.

Yasutaka Furukawa is an associate professor of Computing Science at Simon Fraser University. Prior to SFU, he was an assistant professor at Washington University in St. Louis. Before WUSTL, he was a software engineer at Google. Before Google, he was a post-doctoral research associate at University of Washington. He worked with Prof. Seitz and Prof. Curless at University of Washington, and Rick Szeliski at Facebook (was at Microsoft Research). He completed his Ph.D. under the supervision of Prof. Ponce at Computer Science Department of University of Illinois at Urbana-Champaign in May 2008.

Thomas Funkhouser is a senior research scientist at Google and the David M. Siegel Professor of Computer Science, Emeritus, at Princeton University. He received a PhD in computer science from UC Berkeley in 1993 and was a member of the technical staff at Bell Labs until 1997 before joining the faculty at Princeton. For most of his career, he focused on research problems in computer graphics, including foundational work on 3D shape retrieval, analysis, and modeling. His most recent research has focused on 3D scene understanding in computer vision. He has published more than 100 research papers and received several awards, including the ACM SIGGRAPH Computer Graphics Achievement Award, ACM SIGGRAPH Academy, ACM Fellow, NSF Career Award, Emerson Electric, E. Lawrence Keyes Faculty Advancement Award, Google Faculty Research Awards, University Council Excellence in Teaching Awards, and a Sloan Fellowship.

Organizers

Technical University of Munich

Eloquent Labs, Simon Fraser University

Simon Fraser University, Facebook AI Research

Technical University of Munich

Acknowledgments

Thanks to visualdialog.org for the webpage format.